FancyCache

FancyCache is a supplementary software caching scheme for Windows. The version listed here is FancyCache (Beta) Volume Edition v0.8.1 (1,626 KB, updated 2013-06-21). PrimoCache, also described as a supplementary software caching scheme, comes in two editions: Desktop Edition and Server Edition. SuperSpeed Software, maker of SuperCache, offers solutions for systems engineers, system administrators and power users whose Microsoft® Windows systems are bottlenecked by disk I/O.

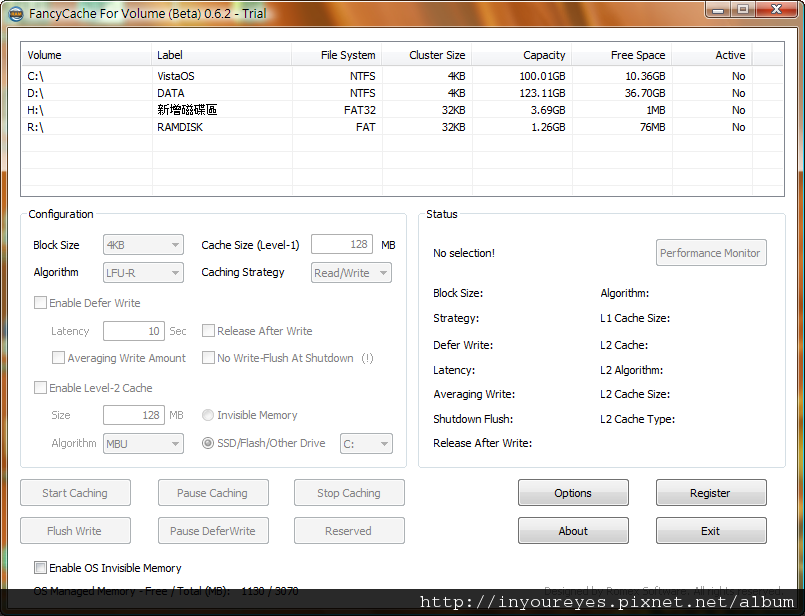

I'm currently using 4 GB of RAM as a RAM cache for my SSD via FancyCache. It works very well with games, where load times are almost zero, and also with the Adobe suite.

It also reduces how much my SSD is used, which is a good thing. I have 16 GB of RAM in total. Would adding another 16 GB of RAM and dedicating it to FancyCache be beneficial? (I realize this question isn't very problem-oriented; if this isn't the right forum for it, I apologize.) My computer specs: Asus P9X79 Deluxe, i7 3930K @ 4.3 GHz, Corsair Vengeance LP 4x4 GB, Samsung SSD 830 256 GB, Win 7 Pro x64.

I have 48 GB of RAM (X58 platform with 6x8 GB sticks), and I dedicated 16 GB to FancyCache under Win7. FancyCache comes with built-in monitoring, and monitoring utilization is by far the most crucial thing you can do to make that determination.
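One way to make that determination from data rather than guesswork is to replay a trace of block accesses and compute the hit ratio an LRU cache would reach at several sizes. The sketch below uses Mattson's stack-distance method, which yields every cache size from a single pass. It assumes a hypothetical trace of block IDs and pure LRU replacement; FancyCache's own replacement policy may differ, and `lru_hit_ratios` is an illustrative name, not part of any tool.

```python
from collections import Counter


def lru_hit_ratios(trace, cache_sizes):
    """Hit ratio an LRU cache would achieve for each size in cache_sizes.

    Assumes pure LRU replacement; `trace` is a hypothetical list of block IDs.
    Uses Mattson's stack-distance method: a reference hits in an LRU cache
    of size C exactly when its stack distance is <= C.
    """
    stack = []              # LRU stack, most recently used block at the end
    distances = Counter()   # stack distance -> number of references
    for block in trace:
        if block in stack:
            # 1 = block was the most recently used one
            depth = len(stack) - stack.index(block)
            distances[depth] += 1
            stack.remove(block)
        # first-time references are cold misses at every cache size
        stack.append(block)

    total = len(trace)
    result = {}
    for size in cache_sizes:
        hits = sum(n for d, n in distances.items() if d <= size)
        result[size] = hits / total if total else 0.0
    return result
```

If the hit ratio barely changes between the current cache size and twice that size, the extra 16 GB would buy little. The list-based stack is O(n·m), so this is only suitable for small traces.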

I use this box to crack passwords with large dictionaries (~400 GB), so I was hoping to keep at least some of the most frequently used dictionaries in RAM. To my surprise, because of how my scripts were set up, I was looping over the same set of hashes with different dictionaries, effectively 'dirtying up' my cache by continuously reading in new dictionaries. As a result, my cache hit ratio was abysmal (something like 0.1%). So I flipped the loop order from `for hash in hashes: for dict in dicts: crack hash with dict` to `for dict in dicts: for hash in hashes: crack hash with dict`. This way the dictionary that was just read stays in memory for the next set of hashes, and the next read comes from RAM rather than disk. This little order flip brought the read-cache hit ratio up to around 80% on average. That one observation was worth its weight in gold.
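The effect of the loop order can be reproduced with a toy model: an LRU cache with room for a few whole dictionaries, replayed under both loop orders. This is a simplified sketch, assuming each dictionary is cached as a single unit and ignoring the actual cracking work; `LRUCache`, `hash_outer` and `dict_outer` are hypothetical names.

```python
from collections import OrderedDict


class LRUCache:
    """Counts hits and misses for an LRU cache holding `capacity` items."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()
        self.hits = 0
        self.misses = 0

    def access(self, key):
        if key in self.items:
            self.hits += 1
            self.items.move_to_end(key)          # mark as most recently used
        else:
            self.misses += 1                     # simulated read from disk
            self.items[key] = True
            if len(self.items) > self.capacity:
                self.items.popitem(last=False)   # evict least recently used

    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def hash_outer(hashes, dicts, cache):
    # Original order: every hash re-reads every dictionary.
    for _ in hashes:
        for d in dicts:
            cache.access(d)
    return cache.hit_ratio()


def dict_outer(hashes, dicts, cache):
    # Flipped order: each dictionary is read once, then reused for all hashes.
    for d in dicts:
        for _ in hashes:
            cache.access(d)
    return cache.hit_ratio()
```

With 10 dictionaries, 100 hashes and room for 4 dictionaries, `hash_outer` gets a 0.0 hit ratio (cycling through more items than an LRU cache holds evicts each one just before it is needed again), while `dict_outer` gets 0.99. The figures from the post (0.1% and 80%) differ because real dictionaries, caches and scripts are not this tidy.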

Furthermore, limiting FancyCache to the disks holding data you know will see heavy I/O, rather than relying on the OS to figure it out, is also quite beneficial. The second-best thing I did was observe the write-caching behavior. It turns out some programs play it safe and flush data after every little change, instead of merging multiple writes into less frequent but larger ones. Done fully synchronously, this caused the program to block until each write was complete. My box is on a UPS, so I can use deferred (write-back) caching without worrying about a power failure before the cache completes the write-back. This hugely helped the raw performance of my main program, and it also visibly improved the smoothness of the whole system, which no longer came to a halt every time the write cache was busy committing dirty buffers to disk.
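The write-merging behavior described here can be sketched as a small write-back buffer: repeated writes to the same block overwrite each other in RAM and reach the disk only when the buffer is flushed. This is a hypothetical model, not FancyCache's implementation; a plain dict stands in for the disk, and `WriteBackBuffer`, `write_through` and `max_dirty` are illustrative names.

```python
class WriteBackBuffer:
    """Hypothetical model of deferred (write-back) caching.

    Dirty blocks are held in RAM, a later write to the same block replaces
    the earlier one, and the buffer is flushed to `disk` in batches.
    Anything still in `dirty` is lost if power fails before flush().
    """

    def __init__(self, disk, max_dirty):
        self.disk = disk            # dict block -> data, stands in for the drive
        self.max_dirty = max_dirty  # flush once this many distinct blocks are dirty
        self.dirty = {}
        self.disk_writes = 0

    def write(self, block, data):
        self.dirty[block] = data    # merge: overwrite any pending write to this block
        if len(self.dirty) >= self.max_dirty:
            self.flush()

    def flush(self):
        for block, data in self.dirty.items():
            self.disk[block] = data
            self.disk_writes += 1
        self.dirty.clear()


def write_through(disk, writes):
    # Synchronous behavior: every small change goes straight to disk.
    count = 0
    for block, data in writes:
        disk[block] = data
        count += 1
    return count
```

For 300 writes cycling over 3 blocks, `write_through` performs 300 disk writes, while the buffer (with `max_dirty=8`) performs 3 on the final flush and leaves the disk in the same state. Whatever is still in `dirty` when the power goes out is lost, which is why the UPS matters.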

Of course, without a UPS (or a battery, if it's a laptop), this would be a dangerous setup. All of this comes down to a fundamental CS problem: working set size.
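Denning's working set W(t, τ), the set of distinct blocks referenced in the last τ accesses, is one standard way to put a number on this. A minimal sketch, assuming a trace of block IDs and a window length `tau` that you choose; `working_set_sizes` is a hypothetical helper. If the working set regularly exceeds what the cache can hold, the hit ratio collapses, as it did with the original loop order.

```python
def working_set_sizes(trace, tau):
    """|W(t, tau)| for each position t in `trace`.

    W(t, tau) is the set of distinct blocks referenced in the window of the
    last `tau` references ending at t (Denning's working set). `trace` is a
    hypothetical sequence of block IDs; `tau` is chosen by the caller.
    """
    sizes = []
    counts = {}  # block -> references to it inside the current window
    for t, block in enumerate(trace):
        counts[block] = counts.get(block, 0) + 1
        if t >= tau:
            # slide the window: drop the reference that just fell out of it
            old = trace[t - tau]
            counts[old] -= 1
            if counts[old] == 0:
                del counts[old]
        sizes.append(len(counts))
    return sizes
```

For example, `working_set_sizes([1, 2, 1, 3, 3, 3], 3)` returns `[1, 2, 2, 3, 2, 1]`. Comparing the peak of this series (scaled by block size) with the cache size gives a rough idea of whether more cache RAM would help.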